
The “B” in USB stands for “Bad”.

by Opus & Daniel C

The objective of this research is to evaluate HID (Human Interface Device) attack techniques and examine known detection and prevention methods. We used the O.MG Cable as a representative example of a highly elusive, effective and well-designed attack tool, and analyzed several other tools for comparison.

This document will:

- List and detail current approaches to detecting HID attacks, explaining why many of these approaches are either infeasible or ineffective.

- Evaluate the O.MG Cable in terms of its features, detection-evasion capabilities, and potential detection methods.

This research does not cover:

- Methods for evaluating the runtime context of malicious commands. If a tool can execute a command, the attack is considered successful regardless of the command’s specifics.

- Detection of wireless devices, such as Bluetooth or WLAN, as this requires separate research encompassing a broader range of devices beyond HID attacks.

The devices analyzed in this research include:

- A standard desktop keyboard: HP KU-1156.

- The same keyboard modified with a custom implant (briefly described below).

- Rubber Ducky hardware Mark I.

- O.MG Cable.

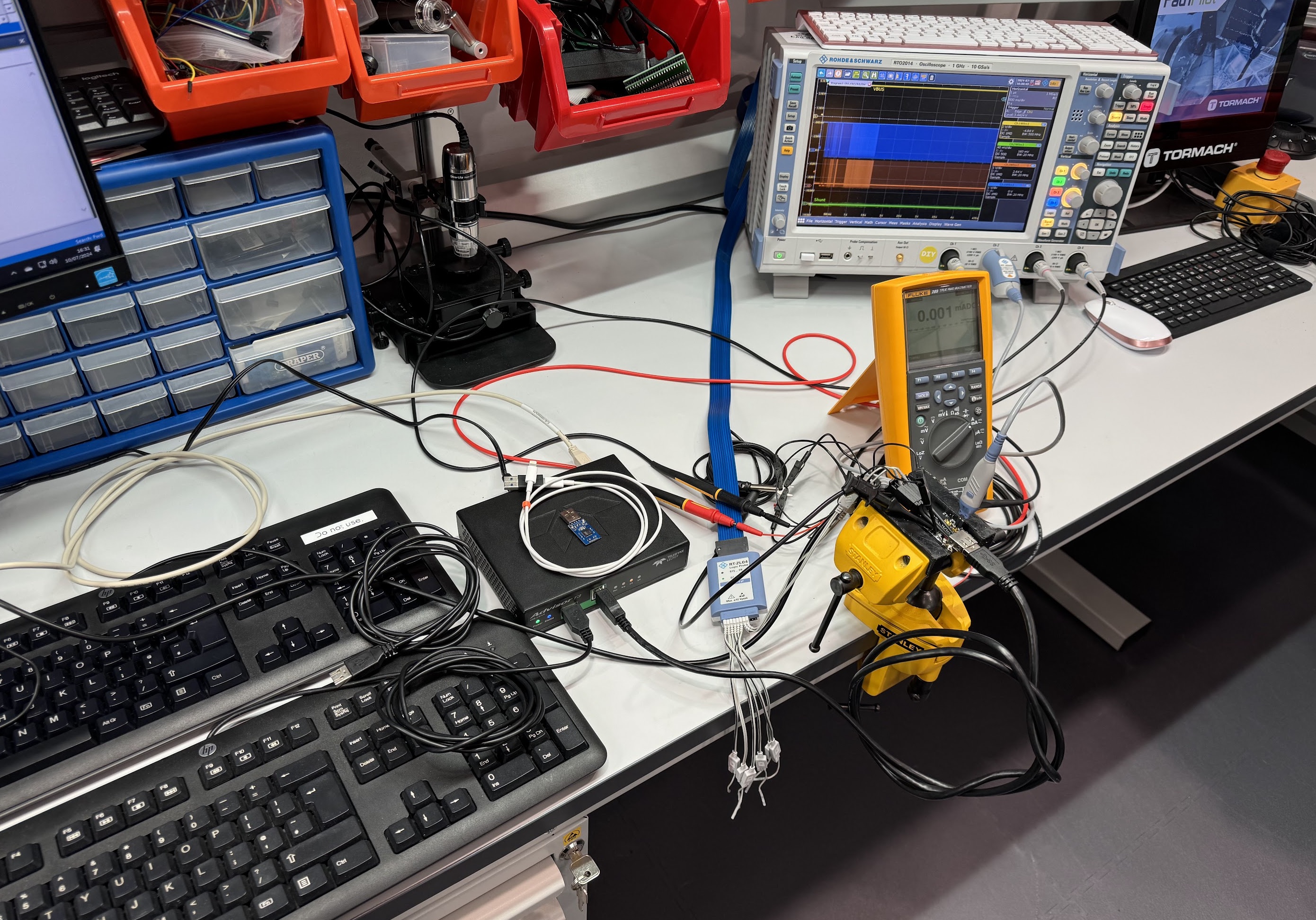

The following equipment was used during this research:

- Teledyne LeCroy Advisor T3 USB analyser,

- Rohde & Schwarz RTO2014 oscilloscope,

- Fluke 289 DMM,

- Tormach xsTECH Router for milling the breakout PCB.

It is crucial to note that, in our view, there is currently no universal method to prevent such attacks. A simple verification approach involves checking whether a workstation can detect and alert (preferably through user notifications or Endpoint Protection Platforms (EPP)) when two USB keyboards of the same make and model are connected simultaneously. If the workstation does not generate alerts under these conditions, it is considered vulnerable.

Implementing a successful attack under these circumstances is merely a matter of engineering and operational execution.
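The verification check above (two keyboards of the same make and model connected at once) could be automated along these lines. This is a minimal sketch. It assumes the OS has already supplied a list of enumerated interfaces. The `UsbInterface` record and the VID/PID values are hypothetical, and collecting the data from a real system is not shown.

```python
from dataclasses import dataclass

# USB HID interface class and the boot-interface protocol value for keyboards.
HID_CLASS = 0x03
KEYBOARD_PROTOCOL = 0x01


@dataclass(frozen=True)
class UsbInterface:
    """Hypothetical record for one enumerated USB interface."""
    port_path: str       # physical location, e.g. "1-2.3"
    vid: int
    pid: int
    iface_class: int
    iface_protocol: int


def duplicate_keyboards(interfaces):
    """Map each keyboard (VID, PID) seen on more than one port to those ports.

    Only boot-protocol keyboards are matched; keyboards that do not expose the
    boot interface would require parsing the HID report descriptor instead.
    """
    ports_by_model = {}
    for iface in interfaces:
        if iface.iface_class == HID_CLASS and iface.iface_protocol == KEYBOARD_PROTOCOL:
            ports_by_model.setdefault((iface.vid, iface.pid), set()).add(iface.port_path)
    return {model: sorted(ports) for model, ports in ports_by_model.items() if len(ports) > 1}


# Illustrative inventory with made-up IDs: the same keyboard model on two ports.
inventory = [
    UsbInterface("1-1", 0x1234, 0xABCD, HID_CLASS, KEYBOARD_PROTOCOL),
    UsbInterface("1-4", 0x1234, 0xABCD, HID_CLASS, KEYBOARD_PROTOCOL),
    UsbInterface("1-2", 0x5678, 0x0001, HID_CLASS, 0x02),  # boot mouse
]
alerts = duplicate_keyboards(inventory)  # maps (0x1234, 0xABCD) to ["1-1", "1-4"]
```

Grouping by physical port rather than counting interfaces means that a single composite keyboard exposing several interfaces on one port is not reported as a duplicate.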

Overview of HID attacks.

We are not presenting a comprehensive history of this attack method. Suffice it to say that it has been possible since the inception of USB-based HIDs (Human Interface Devices, one of the high-level USB device classes), and the only limitation was the availability of programmable microcontrollers able to emulate USB devices. Attacks of this type are commonly, but not formally, known as “BadUSB attacks”. The name derives from the (arguably) first device with such capabilities, presented at the Black Hat USA 2014 conference in the talk BadUSB - On Accessories that Turn Evil. BadUSB itself was an extension of another, now moot, attack from 2006 called USB Switchblade. In that attack, USB storage devices leveraged a Windows autorun.inf misconfiguration/vulnerability to automatically execute programs from a USB-emulated CD-ROM drive.

BadUSB, and practically every device since, follows the same simple idea: since we have a USB keyboard, we can take the “USB” part of the keyboard and attach it to something that will “type” the keystrokes for us. Over the years, advances in microcontroller technology, power management and remote connectivity have allowed increasingly elaborate devices to be built. Typical USB devices made for offensive purposes look like a regular USB flash drive (the O.MG Cable is a notable exception; we have also seen HID devices disguised as USB-powered desktop fans). This has given rise to a very popular attack in which victims are given (or are made to find) such a device in the hope that they will plug it into their workstation. When plugged in, the device presents itself as a USB keyboard, mouse, or both, and executes keystrokes/mouse movements as programmed by the attacker. Advanced (but still cheap) devices use DuckyScript, a scripting language developed for the USB Rubber Ducky, another BadUSB-type device from 2010 that is still actively developed today. DuckyScript allows extensive control over what is typed, when, how fast, with what delay, and so forth.

The above is threat enough, and in most cases such attacks are impossible to detect. Even more troubling, however, are attacks that are not public and are made to measure. One such attack was created to demonstrate how serious (and how easy to perform) this can be. It was presented at BSides Wales 2019 in the talk How to be a Hardware Criminal: HID Attacks Made with Spit and Tape (the how-to for this attack was not made public). It extended the idea of dropping a malicious USB device: a genuine USB keyboard of a popular brand was implanted with a Man-in-the-Middle device placed between its internal USB controller and the user’s workstation:

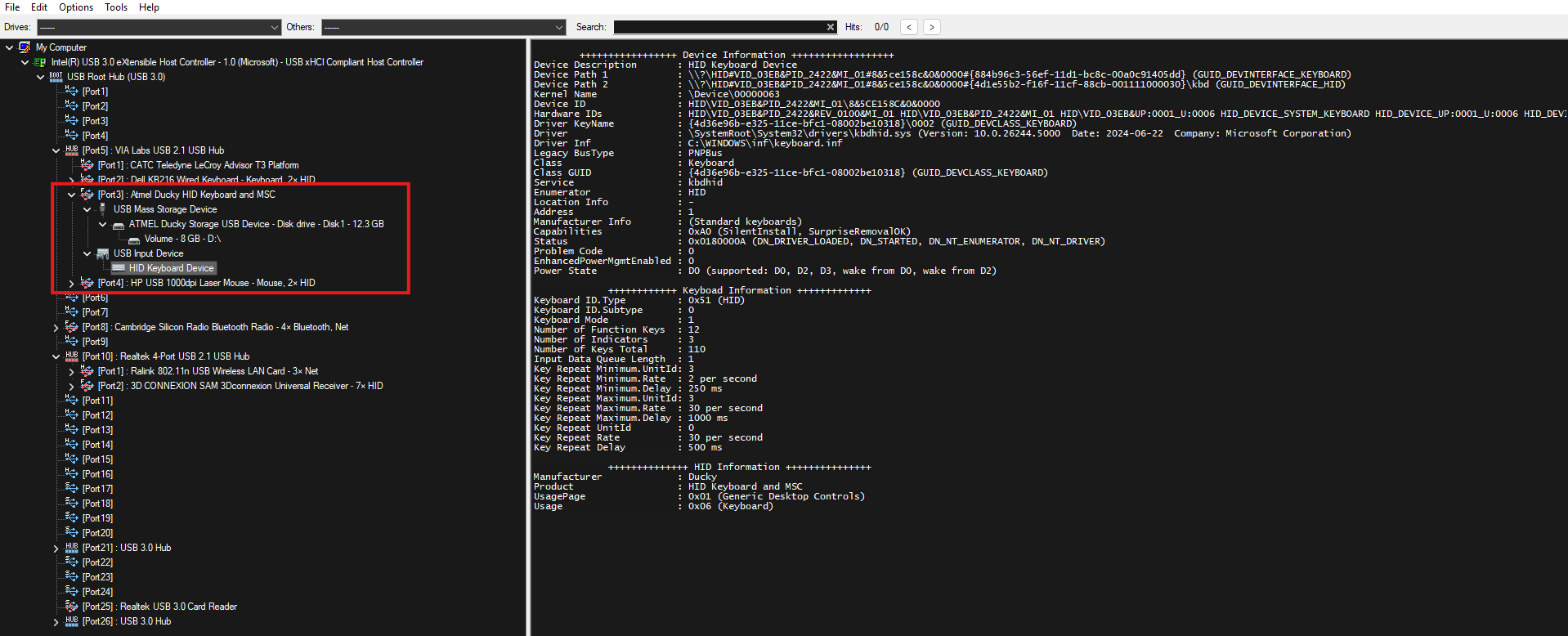

The implant was designed to act as a proxy between the keyboard’s USB controller and the user’s workstation. It acts as a host to the keyboard’s USB controller, and as the keyboard’s USB controller to the workstation. As a result, the device appears to the workstation exactly like the original, unmodified keyboard, with every identifier exchanged during USB enumeration replicated. The implant can also remotely capture and inject keystrokes over WiFi.

Software countermeasures.

This approach ignores any external devices placed inline with a USB device. It relies only on OS-level access to the enumeration and communication parts of the USB protocol.

Command Invocation (Run Command) via HID Devices.

All devices utilized in these attacks, regardless of their level of sophistication, share a common objective: executing a command on the target system. This is distinct from targeted attacks, where gaining visual access to a logged-in workstation and controlling the mouse and keyboard provides full control, assuming the user leaves the workstation unlocked. The primary requirement for achieving this objective is invoking a keyboard shortcut that opens a “Run command” window, a terminal on Unix/Linux systems, or an equivalent interface.

- Win + R (Run dialog)

- Win + X (Quick Link menu)

- and similar [TO BE ADDED].

Unless the user’s environment requires invoking those shortcuts, disabling them stops the vast majority of HID attacks. Any similar method that prevents commands from being launched directly from a command prompt has the same effect.
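Below is a minimal sketch of the blocking idea. It assumes a filter positioned in the keyboard input path that sees raw HID boot-protocol reports, for example a filter driver or a USB proxy (neither is shown). It only covers the two chords listed above. Pressing the Windows key on its own, which opens the Start menu, is not blocked, so this is an illustration rather than a complete control.

```python
# Modifier bits in byte 0 of an 8-byte HID boot-protocol keyboard report.
LEFT_GUI = 0x08
RIGHT_GUI = 0x80
# HID usage IDs (Keyboard/Keypad page) for the R and X keys.
KEY_R = 0x15
KEY_X = 0x1B
BLOCKED_KEYS = {KEY_R, KEY_X}


def is_blocked_chord(report: bytes) -> bool:
    """True if the report holds a GUI (Windows) key together with R or X."""
    if len(report) != 8:
        raise ValueError("expected an 8-byte boot-protocol report")
    gui_held = bool(report[0] & (LEFT_GUI | RIGHT_GUI))
    pressed = set(report[2:8]) - {0x00}  # bytes 2..7 are key usage IDs; 0 = none
    return gui_held and bool(pressed & BLOCKED_KEYS)


def filter_reports(reports):
    """Yield reports unchanged, replacing blocked chords with an all-released report."""
    for report in reports:
        yield bytes(8) if is_blocked_chord(report) else report


win_r = bytes([LEFT_GUI, 0, KEY_R, 0, 0, 0, 0, 0])
plain_r = bytes([0, 0, KEY_R, 0, 0, 0, 0, 0])
filtered = list(filter_reports([win_r, plain_r]))  # [bytes(8), plain_r]
```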

Multiple HID devices.

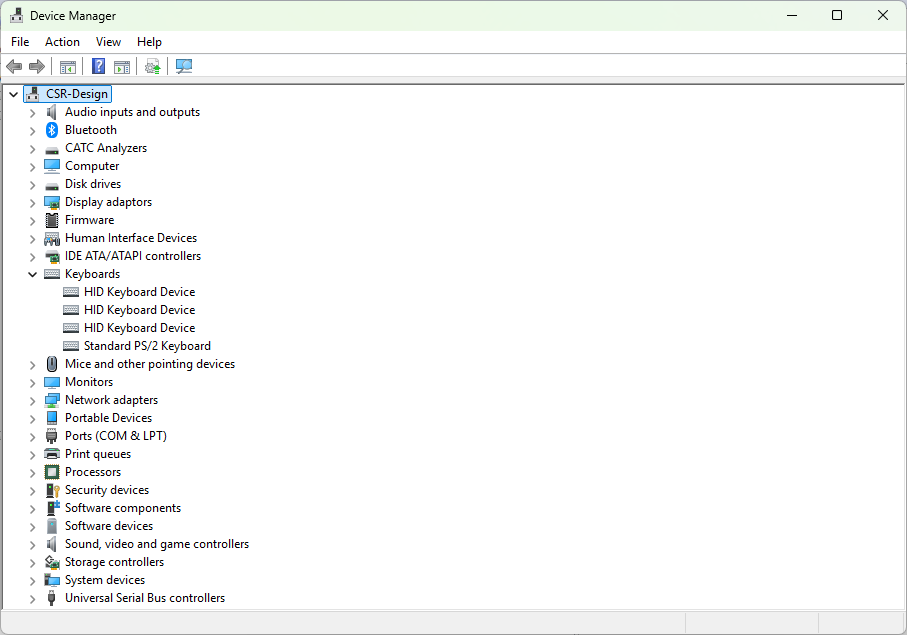

Most workstations require only one keyboard and one mouse, so a second keyboard appearing on such a system is definitely suspicious. This does not apply to laptops, however. External HID devices are normal there, and the built-in keyboard and trackpad are, in most cases, internally connected to the USB bus. The check could be narrowed down if the device inventory were known (that is, the internal PID/VID combinations could be identified and excluded). Even then, it would remain unclear whether a newly appeared HID device is legitimate or malicious, so this method cannot work properly on its own. Moreover, some HID devices that are not keyboards present themselves as keyboards. The Windows Device Manager example pictured lists three “keyboards”: one is legitimate, one is malicious, and one is a 3Dconnexion SpaceMouse used in CAD environments.
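Narrowing the check with a known inventory might look like the sketch below. It assumes a per-workstation baseline of (VID, PID, port path) tuples captured when the machine was provisioned; that format is hypothetical. As noted above, the result is only a list of keyboards to review, not a verdict: identifiers can be spoofed, and a new entry may well be legitimate.

```python
def unexpected_keyboards(baseline, current):
    """Return keyboards present now that are absent from the provisioning baseline.

    Both arguments are iterables of (vid, pid, port_path) tuples describing
    keyboard-class interfaces. Including the port path means that a second,
    identical keyboard on another port is still reported.
    """
    return sorted(set(current) - set(baseline))


# Made-up example: the laptop's internal keyboard is the only baseline entry.
baseline = [(0x1234, 0x0001, "1-8")]
current = [(0x1234, 0x0001, "1-8"), (0x1234, 0x0001, "1-2")]
new_keyboards = unexpected_keyboards(baseline, current)  # [(0x1234, 0x0001, "1-2")]
```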

Categories of Malicious HID Devices and Detection Methods.

Malicious HID devices typically employ various obfuscation methods and can be categorized into three main types:

- Devices That Resemble USB Flash Drives: These devices present themselves in the system’s file explorer and function like regular USB storage devices.

- Devices Containing Only the HID Functionality: Similar in appearance to USB flash drives but exclusively functioning as HID devices.

- Devices Hidden in Other Objects: These include devices concealed within other peripherals, such as charging cables, exemplified by the O.MG Cable.

Methods to detect and handle the second and third categories are discussed in detail elsewhere in this document. Detecting and reporting devices from the first category is relatively straightforward. Each hardware device in a system is identifiable by its physical location in the chain of devices (e.g., its port on a USB hub). A storage interface and a keyboard interface that appear at the same location can therefore be attributed to a single composite device and flagged.
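Below is a sketch of that location-based check. It assumes the interface list (port path plus USB interface class) has already been obtained from the OS; the input format is hypothetical. Some legitimate devices also combine storage and HID functions, so a match is a reason to alert rather than proof of malice.

```python
from collections import defaultdict

HID_CLASS = 0x03           # USB interface class: Human Interface Device
MASS_STORAGE_CLASS = 0x08  # USB interface class: Mass Storage


def storage_hid_composites(interfaces):
    """Return port paths exposing both a mass-storage and a HID interface.

    `interfaces` is an iterable of (port_path, interface_class) pairs, one per
    enumerated interface, e.g. ("1-2", 0x08).
    """
    classes_by_port = defaultdict(set)
    for port_path, iface_class in interfaces:
        classes_by_port[port_path].add(iface_class)
    return sorted(
        port for port, classes in classes_by_port.items()
        if {HID_CLASS, MASS_STORAGE_CLASS} <= classes
    )


# Made-up example: port 1-2 hosts a "flash drive" that is also a keyboard.
seen = [("1-2", MASS_STORAGE_CLASS), ("1-2", HID_CLASS),
        ("1-3", MASS_STORAGE_CLASS), ("1-4", HID_CLASS)]
suspicious_ports = storage_hid_composites(seen)  # ["1-2"]
```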

Keystroke timings.

Keystroke timing analysis is a popular method for detecting, rather than preventing, HID attacks. This technique involves measuring the time between keystrokes, focusing on both the speed and the variations in speed. The underlying assumption is that maliciously injected keystrokes will be quick and uniformly spaced. However, these assumptions are becoming less valid due to advancements in attack tools like DuckyScript, which now support randomization of timing and speed adjustments (see DuckyScript - Randomization).

Solutions based on keystroke timing can effectively block malicious actions. However, the window available for detection is often too short to respond adequately, particularly given how few keystrokes are needed to execute an attack.

An example of a software solution based on keystroke timing analysis is described in the 2018 paper USBlock: Blocking USB-Based Keypress Injection Attacks. However, this approach has been partially rendered obsolete by the randomization features now available in modern attack tools such as the Bash Bunny and P4wnP1 A.L.O.A.
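The heuristic can be sketched as follows. This is a generic illustration in the spirit of timing-based detectors, not the USBlock implementation. The thresholds and the sample timelines are made up.

```python
from statistics import mean, pstdev


def looks_injected(timestamps_ms, min_mean_ms=30.0, max_cv=0.15, min_keys=8):
    """Flag typing that is both fast and unusually uniform.

    timestamps_ms: key-press times in milliseconds, in order.
    The thresholds are illustrative, not tuned. Returns None until `min_keys`
    presses have been seen; by then a short payload may already have run.
    """
    if len(timestamps_ms) < min_keys:
        return None
    intervals = [b - a for a, b in zip(timestamps_ms, timestamps_ms[1:])]
    avg = mean(intervals)
    cv = pstdev(intervals) / avg if avg > 0 else 0.0  # coefficient of variation
    return avg < min_mean_ms and cv < max_cv


scripted = [i * 8 for i in range(20)]                 # fixed 8 ms spacing
human = [0, 140, 260, 430, 520, 700, 810, 990, 1100]  # irregular and slower
jittered = [0, 60, 160, 220, 320, 380, 480, 540, 640] # randomized injection delays
```

With these made-up timelines, only `scripted` is flagged. The `jittered` sequence, which stands in for a payload using randomized delays, passes. That is the weakness described above.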

PIN entry.

Upon connecting a new keyboard, requiring the user to enter a PIN displayed on the screen is an effective method to prevent unknown keyboards (or malicious devices masquerading as keyboards) from broadcasting keystrokes without the user’s knowledge. This approach ensures that:

- The user is aware that they have connected a keyboard, as HID-attack devices typically disguise themselves as non-keyboard peripherals.

- The attacker, without visual access to the screen, cannot determine the PIN, effectively implementing a form of second-factor authentication.

Despite its effectiveness, this method is not widely implemented by major operating systems. Windows 11 Insider briefly included this feature, and Apple macOS has a similar mechanism where the user is asked to press certain keys to recognize the keyboard’s layout and language. However, this method introduces some friction:

- Requiring a PIN Every Time: Mandating a PIN entry each time a HID keyboard is connected could become an annoying feature, especially for desktop workstations where the user would need to enter a PIN before typing their credentials.

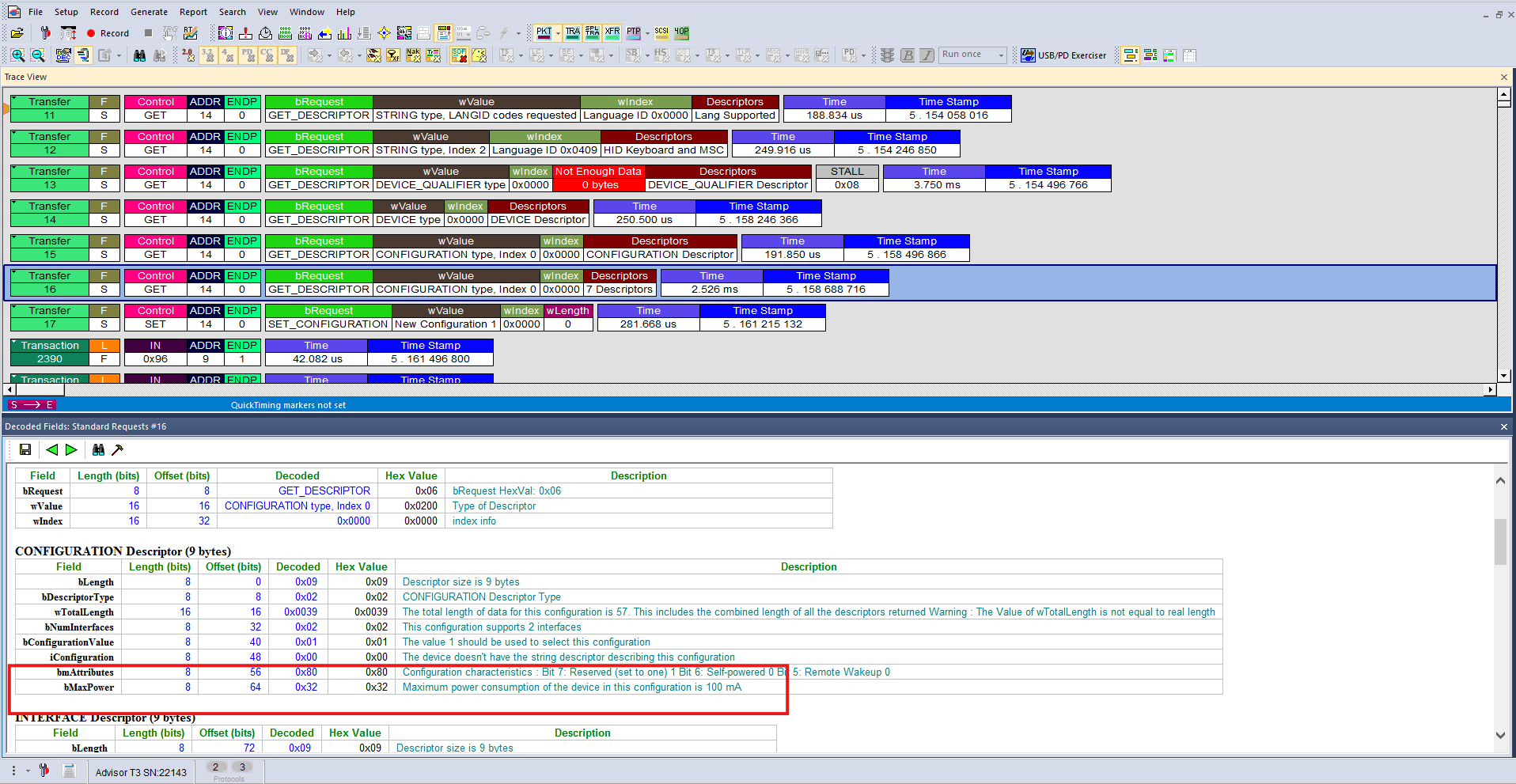

- Requiring a PIN for New Keyboards Only: This raises the issue of defining what constitutes a “new keyboard.” If the criteria are too lax, such as relying solely on PID/VID whitelisting, they become easy to circumvent, as demonstrated in the hardware section. Conversely, if the criteria are too strict, there is a risk of false positives, where legitimate keyboards are flagged, causing unnecessary inconvenience. Stricter criteria could rely on factors such as timings or individual packets exchanged during the USB enumeration phase.

The challenge lies in balancing security with user convenience. Implementing an effective yet user-friendly solution remains a significant hurdle, and further research and development are necessary to address these challenges adequately.

Friction is the enemy of security and should be avoided at all costs.
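The PIN-entry flow described in this section can be sketched as a small state machine. This is a minimal illustration, not any operating system’s implementation. The six-digit PIN length and three-attempt limit are assumptions.

```python
import secrets


class PinGate:
    """Hold input from a newly attached keyboard until it types an on-screen PIN.

    How keystrokes reach this object and how the PIN is displayed are outside
    the scope of this sketch.
    """

    def __init__(self, digits=6, max_attempts=3):
        # secrets, not random: the PIN must be unpredictable to the attacker.
        self.pin = "".join(secrets.choice("0123456789") for _ in range(digits))
        self.max_attempts = max_attempts
        self.buffer = ""
        self.failures = 0
        self.state = "pending"  # "pending", "trusted" or "locked"

    def feed(self, char: str) -> str:
        """Consume one typed character; nothing is forwarded while pending."""
        if self.state != "pending":
            return self.state
        self.buffer += char
        if len(self.buffer) == len(self.pin):
            if secrets.compare_digest(self.buffer, self.pin):
                self.state = "trusted"
            else:
                # Fixed-length attempts with a cap stop blind brute-forcing.
                self.failures += 1
                self.buffer = ""
                if self.failures >= self.max_attempts:
                    self.state = "locked"
        return self.state
```

Checking fixed-length attempts, rather than looking for the PIN anywhere in a stream of keystrokes, prevents a device that cannot see the screen from simply typing every possible digit sequence.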

Hardware methods.

A possible hardware approach places an external device between the USB device and the host workstation. In practice, this means using a specialised docking station/USB hub connected to one workstation port while all other ports are disabled. Apart from design and manufacturing costs, this solution will not work for roaming users who, for example, work from a coffee shop or move around their office between meeting rooms and desks.

Power Consumption Monitoring for HID Devices.

Just plug it in and look for deviations from the baseline power consumption... easy!

This concept is one of the more popular ideas for detecting malicious devices, but it remains largely theoretical: a practical implementation is possible, but costly. The idea stems from the obvious conclusion that a malicious device will likely draw more current than a typical keyboard. Specifically, if a device identifies itself as a HID keyboard but draws more current than expected (especially if it uses remote connectivity), it should be straightforward to flag.

However, there is a significant misunderstanding related to this approach. End-user devices often display messages about overcurrent situations on a USB port, or about other error states related to the energy drawn by connected devices. This can be misleading, because USB ports and their controllers do not have current-measuring capabilities; they do not need them. Instead, the host relies on the USB device’s own declaration of how much current it will draw (the bMaxPower field of its configuration descriptor).

There is a general over-current protection mechanism designed to safeguard the USB controller and host system from short circuits and similar events. However, it is important to note that there is no actual current-measuring capability available to end users.
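To illustrate what the host actually “knows” about a device’s power draw, the sketch below reads the declared maximum from a raw configuration descriptor. The descriptor bytes are an invented example, and fetching them from a real device (via a standard GET_DESCRIPTOR request) is not shown. A malicious device can simply declare a plausible value here, which is why real measurement requires external hardware.

```python
def declared_max_current_ma(config_descriptor: bytes, superspeed: bool = False) -> int:
    """Return the maximum current a device *declares*; nothing is measured.

    bMaxPower is byte 8 of the standard 9-byte configuration descriptor
    (bDescriptorType 0x02). It is expressed in 2 mA units for USB 2.0 and
    in 8 mA units when the device operates at SuperSpeed.
    """
    if len(config_descriptor) < 9 or config_descriptor[1] != 0x02:
        raise ValueError("not a configuration descriptor")
    return config_descriptor[8] * (8 if superspeed else 2)


# Made-up descriptor for a bus-powered keyboard declaring bMaxPower = 0x32.
example = bytes([0x09, 0x02, 0x22, 0x00, 0x01, 0x01, 0x00, 0xA0, 0x32])
declared = declared_max_current_ma(example)  # 100 (mA)
```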

To enhance this method, a custom interface can be employed. Such an interface, similar to devices that display current values and are generally available, can implement current sensing for every USB device connected to it. This, however, introduces a race condition: to prevent an attack, the device must be allowed to enumerate (so the measuring interface knows it is a keyboard) but must be prevented from sending actual data if deemed malicious.

This approach necessitates the measuring device to not only measure and analyze current but also to function as a USB proxy. It must present itself as a host to the analyzed device and as a peripheral to the end-user workstation. Essentially, it acts as an intermediary, controlling the communication based on the current draw analysis.

To facilitate this, we used a custom-made USB breakout board, providing access to all lines and enabling current measurement via a shunt resistor.

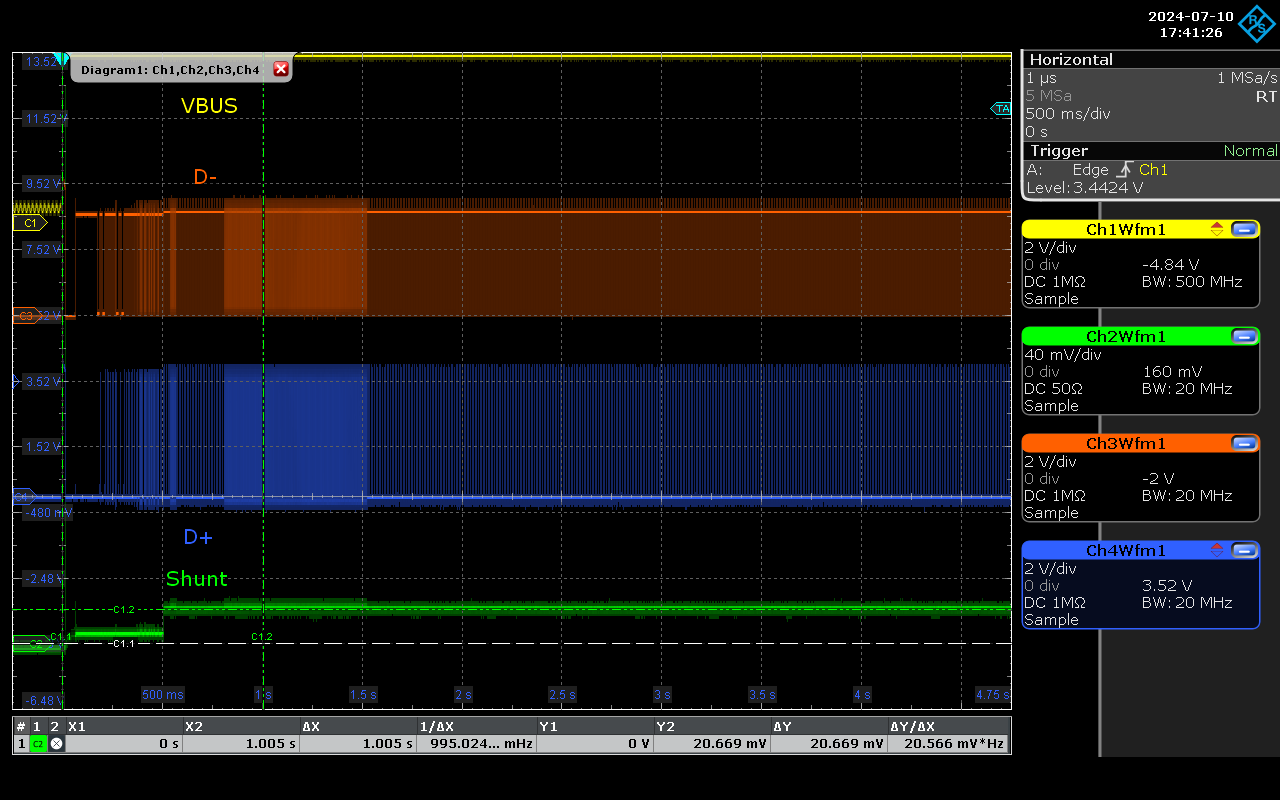

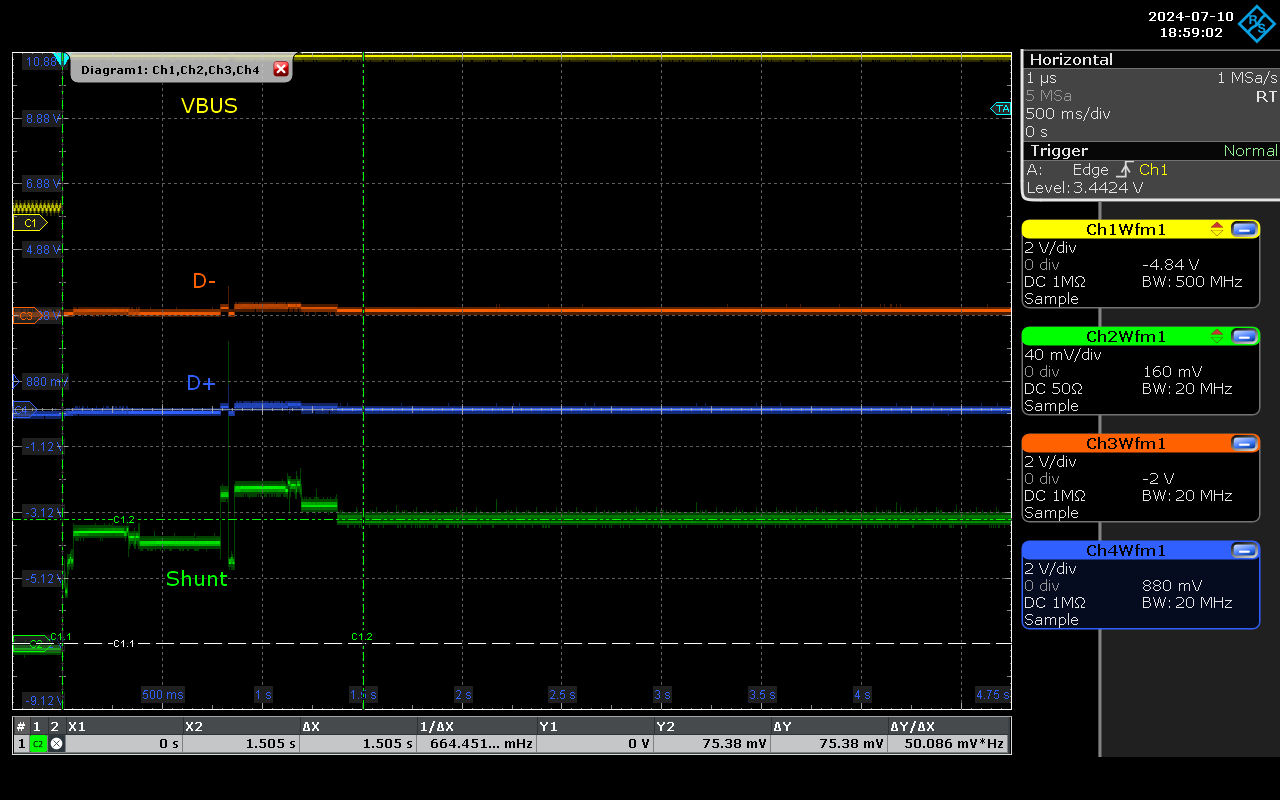

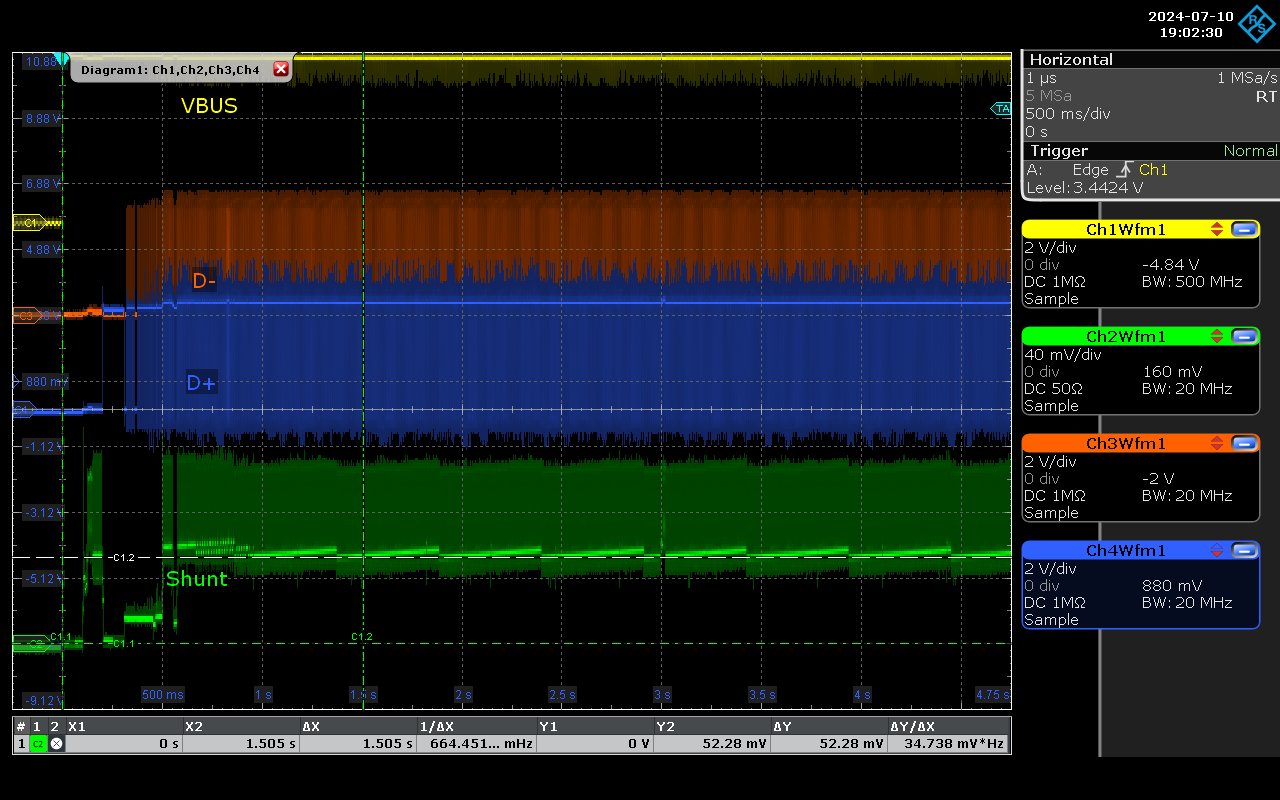

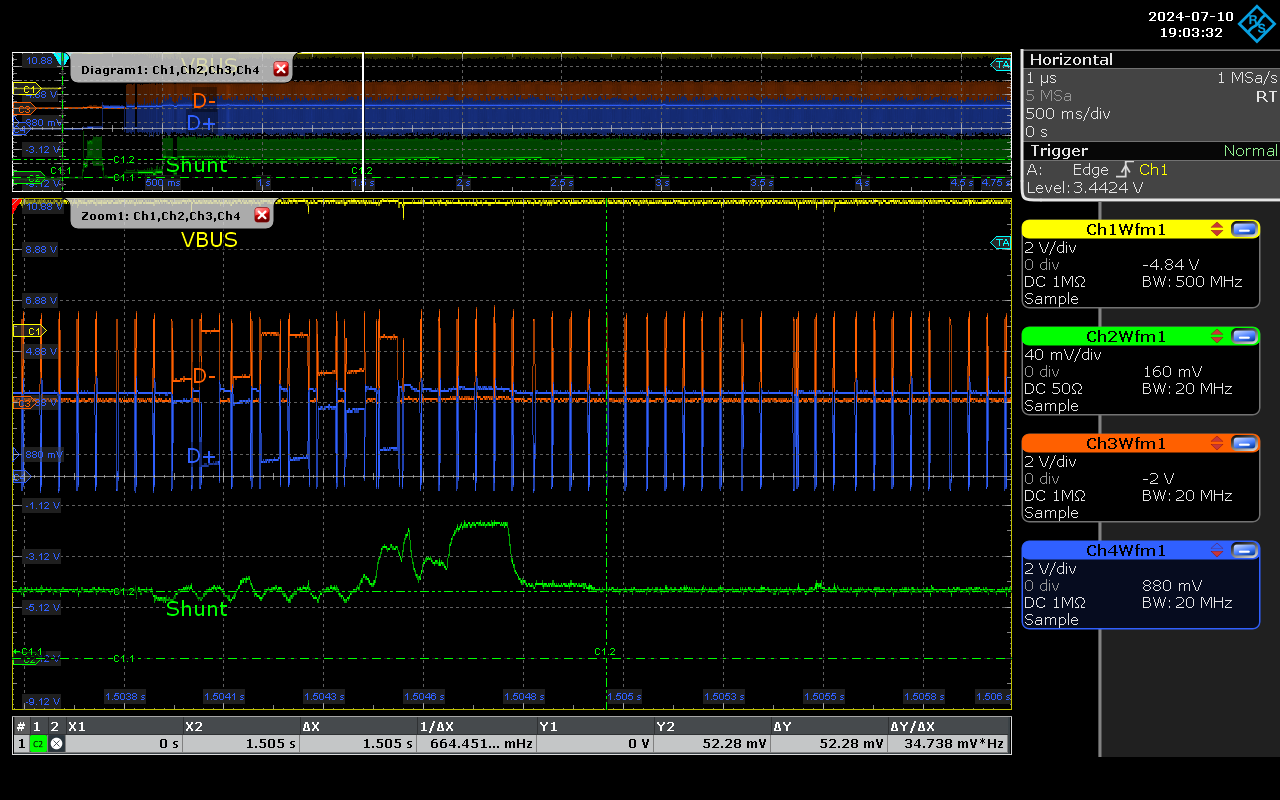

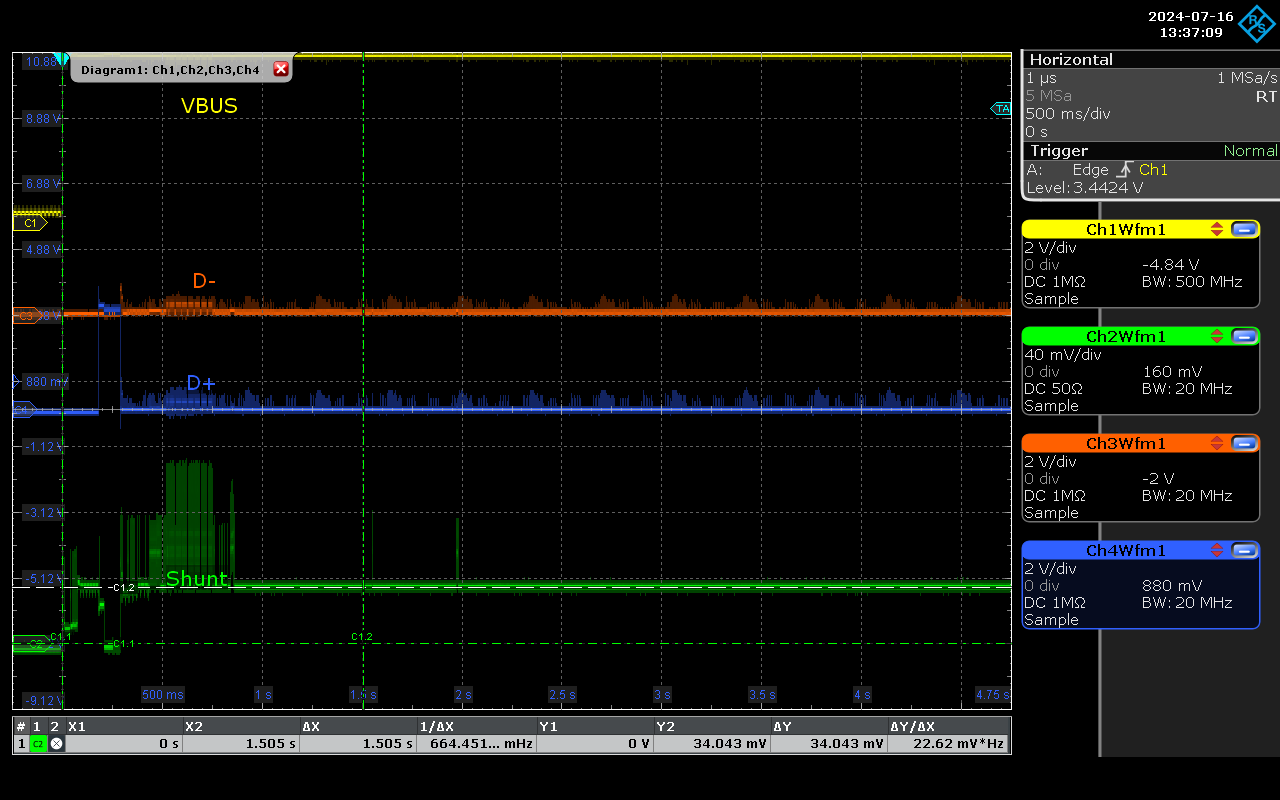

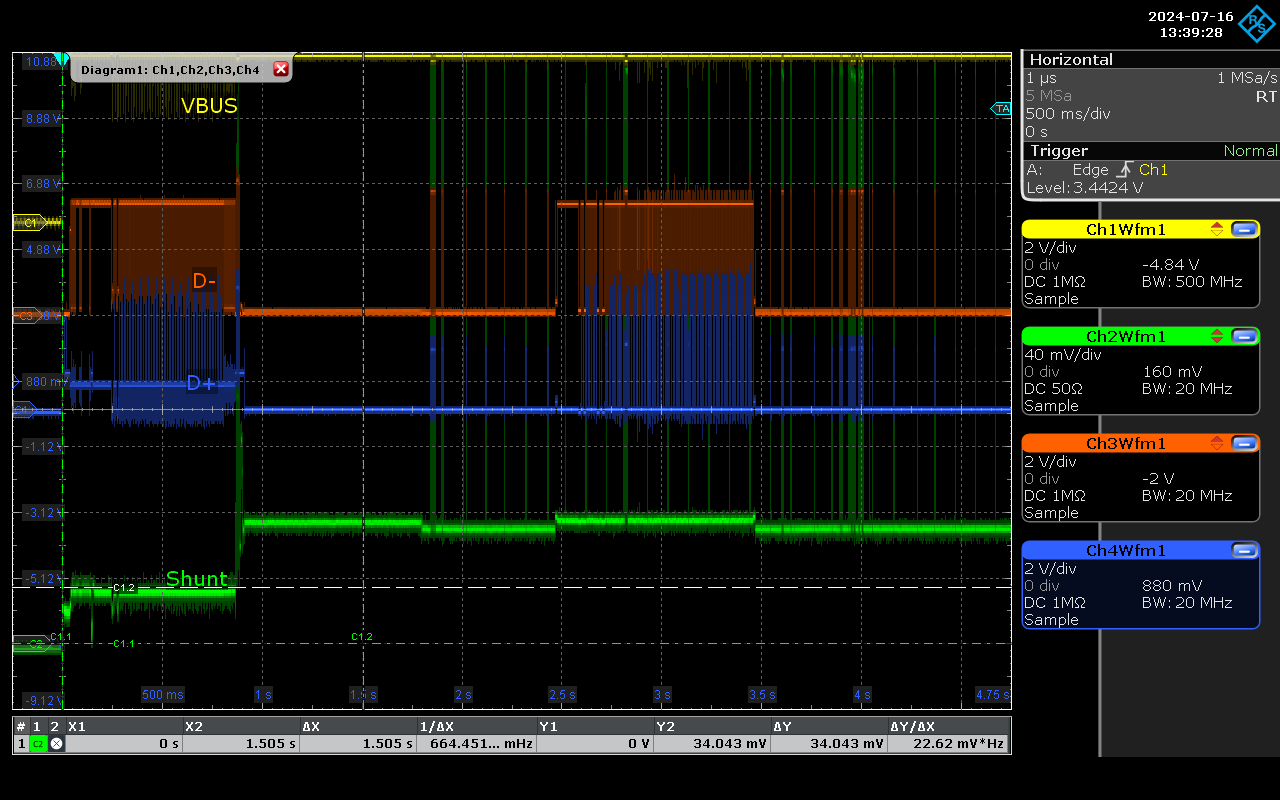

As the lines are exposed and unshielded, there is some crosstalk between the D lines and the power lines, which is visible on the traces below. All oscilloscope measurements are triggered on VBUS becoming active, which corresponds to the device being plugged in. The shunt is 1 Ohm, so by Ohm’s law (I = V/R) the displayed voltage corresponds 1:1 to current (1 mV equals 1 mA).
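With a 1 Ohm shunt the conversion is trivial, but it is the first step of any automated analysis of these traces. Below is a minimal sketch. It assumes a capture exported as a list of shunt-voltage samples in millivolts; the export format is an assumption. It does not filter out the crosstalk mentioned above, and the sample values are invented.

```python
from statistics import mean

SHUNT_OHMS = 1.0  # shunt value on the breakout board described above


def shunt_to_ma(samples_mv, shunt_ohms=SHUNT_OHMS):
    """Convert shunt voltage samples (mV) to current (mA): I = V / R."""
    return [v / shunt_ohms for v in samples_mv]


def summarise(samples_mv, tail_fraction=0.25):
    """Mean and peak current, plus a steady-state estimate from the end of the capture."""
    current = shunt_to_ma(samples_mv)
    tail = current[-max(1, int(len(current) * tail_fraction)):]
    return {"mean_ma": mean(current), "peak_ma": max(current), "steady_ma": mean(tail)}


# Made-up samples (mV): inrush and enumeration, then settling at about 20 mA.
trace = [0, 5, 45, 30, 22, 20, 20, 20]
stats = summarise(trace)  # {"mean_ma": 20.25, "peak_ma": 45.0, "steady_ma": 20.0}
```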

Low resolution power analysis.

Measuring current consumption with a multimeter-like tool, without analyzing trends over time, can broadly detect higher current consumption. This identifies devices with additional power-consuming components, such as WiFi or Bluetooth modules, which may indicate suspicious activity. However, as far as we know, there is no centralised database of the current consumption of all the devices we use. So how can you tell whether a device draws more or less power than its manufacturer originally designed it to?

Our measurements for various devices were as follows:

- Normal Keyboard: 20mA

- Normal Keyboard with Pass-Through Implant: 64mA

- Rubber Ducky: Initially around 60mA, later 10mA (for comparison, a normal flash drive draws 30mA)

- O.MG Cable: 70mA

While there are noticeable differences in current consumption, they are not sufficiently significant to provide conclusive results. For example, the Rubber Ducky, despite functioning as both an HID emulator and a storage device, draws less current than a regular keyboard. Both the O.MG Cable and the implanted keyboard require more power due to their wireless connectivity, which can be flagged as suspicious. However, given the necessity of a USB proxy to effectively implement this detection method, it becomes clear that a more sophisticated approach is warranted.
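Using the figures above, a low-resolution check reduces to comparing each reading with a baseline for the model the device claims to be. The sketch below assumes such a per-model baseline exists (here, the 20mA measured for the unmodified keyboard). The 2x threshold is arbitrary.

```python
KEYBOARD_BASELINE_MA = 20  # unmodified keyboard, as measured above

# Static measurements listed above (the Rubber Ducky at its settled value).
measured_ma = {
    "Normal keyboard": 20,
    "Keyboard with pass-through implant": 64,
    "Rubber Ducky": 10,
    "O.MG Cable": 70,
}


def flag_against_baseline(readings, baseline_ma, ratio=2.0):
    """Flag devices drawing more than `ratio` times the claimed model's baseline.

    Devices drawing less than the baseline are never flagged, which is
    exactly how the Rubber Ducky slips through.
    """
    return {name: ma / baseline_ma > ratio for name, ma in readings.items()}


flags = flag_against_baseline(measured_ma, KEYBOARD_BASELINE_MA)
# Only the implanted keyboard (3.2x) and the O.MG Cable (3.5x) exceed 2x.
```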

High resolution power and bus analysis.

Edge ML could be effective for high-resolution analysis of current changes over time, which offers a more detailed and accurate way of detecting malicious HID devices. Monitoring the D+ and D- (data) lines is also beneficial, as some devices do not enumerate until they are actively used. Detecting their presence requires observing the electrical signals on these lines. We dive into this later in this paper.

Normal keyboard.

This shows a very standard enumeration process, where the current stabilises at about 20mA once the device enables endpoints 1 and 2 and switches into its normal operating state.

Implanted keyboard.

In this case, enumeration does not happen (similar to the way the O.MG Cable works). There is, however, a stable current draw with no activity on the D lines, as the device is configured to wait for a connection to its C2 (command-and-control) server before enabling pass-through:

At the software level (as analysed from the OS), the device would not show up at all, as any spurious signals on the D lines caused by noise would be discarded at the host-controller level.
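Spotting this condition requires the kind of external measuring interface described earlier, since the OS never sees the device. The sketch below assumes such an interface supplies time-aligned samples of VBUS current and D+/D- voltages; that data source is hypothetical. The sample values are invented.

```python
from statistics import mean


def powered_but_silent(vbus_ma, d_plus_v, d_minus_v,
                       min_current_ma=5.0, attach_threshold_v=2.0):
    """Flag a device that draws current while neither data line is pulled up.

    A USB 2.0 device signals attachment by pulling D+ (full/high speed) or
    D- (low speed) high; until it does, the host never starts enumeration.
    Inputs are time-aligned samples from one capture; thresholds are
    illustrative and take no account of crosstalk spikes.
    """
    drawing_power = mean(vbus_ma) >= min_current_ma
    attached = any(v >= attach_threshold_v for v in list(d_plus_v) + list(d_minus_v))
    return drawing_power and not attached


# Made-up captures: an implant waiting for its C2 versus a low-speed keyboard.
implant = powered_but_silent([60, 64, 64, 63], [0.1, 0.2, 0.1, 0.0], [0.0, 0.1, 0.3, 0.1])
keyboard = powered_but_silent([15, 20, 20, 20], [0.0, 0.1, 0.0, 0.1], [0.0, 3.2, 3.3, 3.3])
```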

Rubber Ducky.

Our static measurement showed an average current draw of around 10mA; however, the overview trace shows that the current level changes dynamically:

This discrepancy arises because the device essentially comprises two components and includes a general-purpose MCU (Microcontroller Unit). The MCU performs various background tasks even when idle, contributing to the overall power consumption. A closer examination reveals activity on both the current and D-lines.

As we are analyzing the analogue domain (without considering the meaning at the higher communication level), we can infer that if this device presents itself as mass storage, it is, at the very least, unusual when compared to a regular USB storage device:

O.MG Cable.

This device is particularly interesting because it typically does not enumerate until it is needed (similar to the implanted keyboard described above). In the example below, this occurs at around 2.5 seconds:

As the patterns on both the power and D lines are quite distinctive, analysis at this resolution could enable detecting and preventing malicious use of this port.

Evaluating protocol characteristics.

Each USB device identifies itself with two parameters that are unique to a vendor/model combination: the VID (vendor ID) and the PID (product ID). Their purpose is to help the host identify the device and find and load the correct drivers. In the first years after this attack became public, it was possible to detect malicious devices this way, because third-party USB controllers had their own PID/VID combinations. Attackers quickly worked around this with microcontrollers (or the USB controllers used inside or alongside them) that allow a custom PID/VID combination to be set. Still, most cheap devices (and low-effort attackers) do not bother changing these parameters, so it is important to continuously look for and block them at the endpoint level.
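Such an endpoint-level check is straightforward. The sketch below assumes a plain-text blocklist of vvvv:pppp hexadecimal pairs maintained from threat intelligence. The file format is an assumption, and the entries shown are placeholders. The check does nothing against devices with customised identifiers, such as the O.MG Cable.

```python
def parse_blocklist(lines):
    """Parse 'vvvv:pppp' hexadecimal VID:PID pairs, ignoring blanks and # comments."""
    ids = set()
    for line in lines:
        entry = line.split("#", 1)[0].strip()
        if not entry:
            continue
        vid_hex, pid_hex = entry.split(":")
        ids.add((int(vid_hex, 16), int(pid_hex, 16)))
    return ids


def is_blocked(vid: int, pid: int, blocklist) -> bool:
    """True if the (VID, PID) pair appears on the blocklist."""
    return (vid, pid) in blocklist


# Placeholder entries, not the IDs of any real tool.
blocklist = parse_blocklist([
    "# default IDs of known injection tools",
    "abcd:0001",
    "",
    "ABCD:0002  # second entry",
])
```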

Detection/prevention methods: summary.

Given the considerations above, we present a list of techniques with varying degrees of effectiveness. This paper was written to better inform the community about such attacks and to emphasise possible detection methods that could be used to prevent compromise.

We propose combining these methods, assigning appropriate weights to each, and employing a machine learning system to evaluate the risk and determine the necessary actions.
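As a stand-in for the proposed ML system, the weighted combination can be sketched as a logistic score over the outputs of individual detectors. Everything specific here (signal names, weights, bias and action thresholds) is hypothetical. In the proposed approach, these values would be learned from data rather than set by hand.

```python
import math

# Hypothetical weights per detector signal; the proposed system would learn these.
WEIGHTS = {
    "duplicate_keyboard": 1.5,
    "uniform_timing": 1.0,
    "current_anomaly": 2.0,
    "late_enumeration": 2.0,
    "blocked_vid_pid": 3.0,
}
BIAS = -3.0


def risk_score(signals):
    """Combine detector outputs (each 0.0-1.0) into a 0-1 risk via a logistic function."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in signals.items())
    return 1.0 / (1.0 + math.exp(-z))


def decide(score, alert_at=0.5, block_at=0.8):
    """Map a risk score to an action; thresholds are illustrative."""
    if score >= block_at:
        return "block"
    if score >= alert_at:
        return "alert"
    return "allow"
```

For example, a device with both a current anomaly and late enumeration scores about 0.73 and triggers an alert. Adding a blocklisted VID/PID to the current anomaly pushes the score to about 0.88, which leads to a block.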

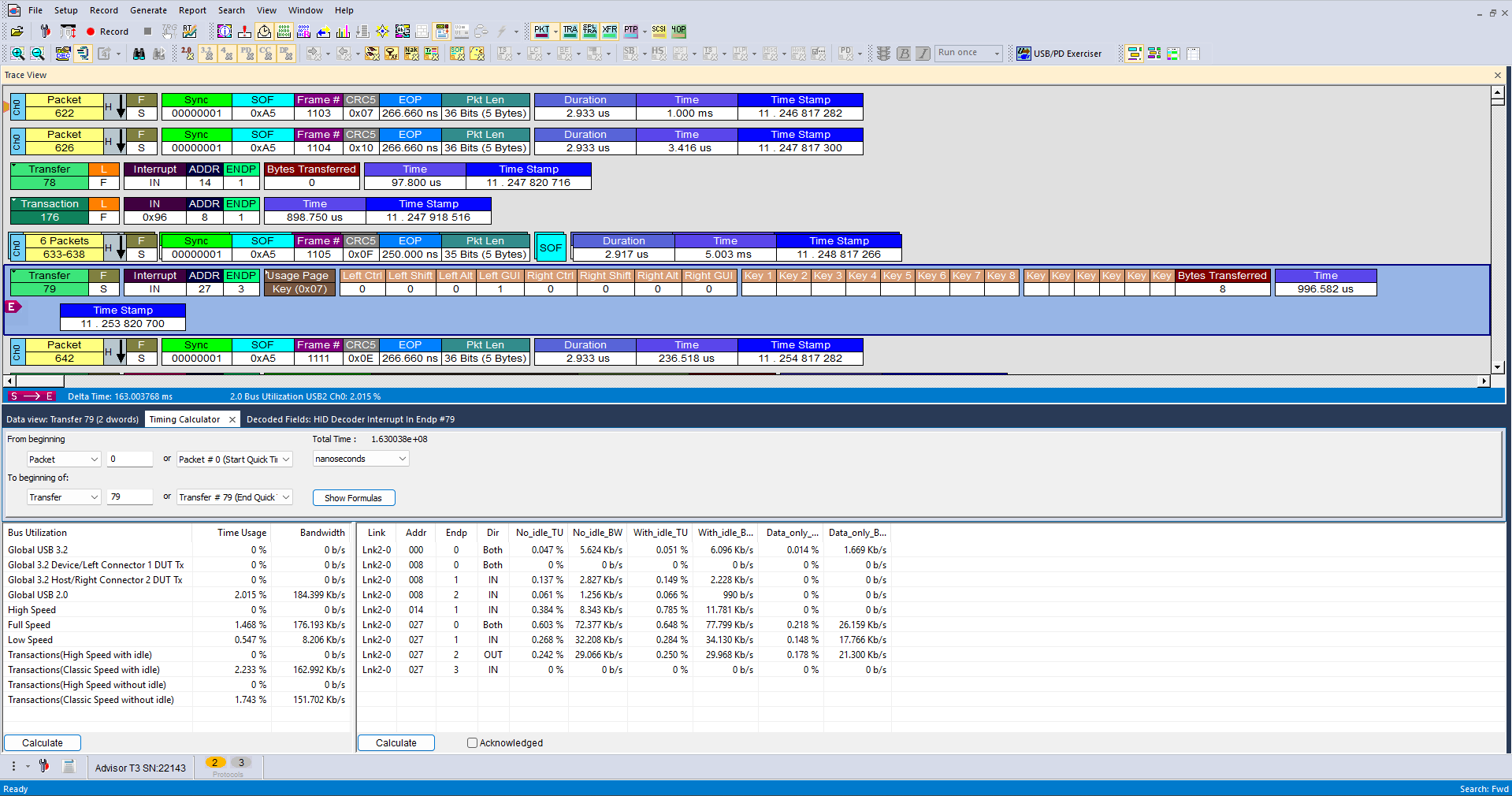

This type of attack typically relies on executing the payload swiftly, minimizing the user’s chance to notice and react. Consequently, preventive measures often transition into post-factum detection methods:

In the above example, it takes 167 milliseconds from the moment the device is inserted into the port until the first keystroke (the left GUI key, or Windows key) is sent. While the entire payload execution takes longer, the focus here is on the fact that if a malicious device reaches this point and sends the keystroke, preventive measures have already failed. Detecting the exact commands being typed or executed falls outside the scope of this document.
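The 167-millisecond figure suggests a simple after-the-fact heuristic: a person rarely starts typing within a fraction of a second of plugging in a keyboard. The sketch below assumes timestamped USB events are available; the event format and the one-second floor are assumptions. It cannot prevent anything, since it only fires once a keystroke has been sent. A payload delayed by a couple of seconds also passes it.

```python
def time_to_first_keystroke(events):
    """events: time-ordered (t_ms, kind) tuples, kinds "vbus_on" and "key_report"."""
    t_power = next(t for t, kind in events if kind == "vbus_on")
    t_key = next((t for t, kind in events if kind == "key_report"), None)
    return None if t_key is None else t_key - t_power


def suspiciously_fast(events, human_floor_ms=1000):
    """Flag a keyboard whose first keystroke arrives sooner than a person would type."""
    delta = time_to_first_keystroke(events)
    return delta is not None and delta < human_floor_ms


fast_payload = [(0, "vbus_on"), (167, "key_report")]      # timing from the example above
delayed_payload = [(0, "vbus_on"), (2500, "key_report")]  # e.g. a payload delayed by 2.5 s
```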

Due to the brief execution window, we consider proxy-like solutions in both software and hardware domains. These solutions involve taking control of the enumeration process and placing a physical device between anything the user can plug in and the workstation. This is because most detection methods rely on the successful completion of the enumeration process, and if a malicious device is detected, normal data exchange must be blocked.

However, such devices carry considerable design and implementation costs, especially in the case of hardware-based solutions.
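The core of such a proxy is a gate between the analysed device and the workstation. The sketch below covers only that logic. It assumes the USB plumbing and the detector that issues the verdict exist elsewhere; both are hypothetical here. Holding input until a verdict adds latency for legitimate keyboards, which is the kind of friction discussed earlier.

```python
from collections import deque


class EnumerationGate:
    """Data path of a hypothetical USB proxy: enumeration completes, input waits for a verdict."""

    def __init__(self):
        self.verdict = None   # None while analysis runs, then "allow" or "block"
        self.held = deque()   # reports received before the verdict
        self.forwarded = []   # stands in for "sent on to the workstation"

    def on_report(self, report: bytes) -> None:
        if self.verdict == "allow":
            self.forwarded.append(report)
        elif self.verdict is None:
            self.held.append(report)
        # verdict == "block": the report is silently dropped

    def set_verdict(self, verdict: str) -> None:
        if verdict not in ("allow", "block"):
            raise ValueError(f"unknown verdict: {verdict}")
        self.verdict = verdict
        if verdict == "allow":
            self.forwarded.extend(self.held)  # release buffered input in order
        self.held.clear()
```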

Proposed Detection Techniques

Detecting malicious devices is a complex challenge; however, the following techniques could be considered to enhance detection efforts.

- Control of Enumeration Process: Software solutions that monitor and control the enumeration process of USB devices, where any anomaly detected during this process triggers an immediate block or alert. This could be extended with hardware intermediaries that intercept and verify the legitimacy of USB devices before allowing data transfer.

- Timing Analysis: Analyzing the timing characteristics of USB device activity. The rapid succession of events typical of HID attacks can be flagged as suspicious, although this is not foolproof on its own, as discussed elsewhere in this paper.

- Machine Learning-Based Anomaly Detection: As edge machine learning (Edge ML) advances, models could be trained on normal USB device behavior to identify deviations indicative of potential attacks. Risk scores assigned from the aggregated data of the various detection methods would enable automated decisions about the legitimacy of a device.

- Behavioral Monitoring: Continuous monitoring of USB device behavior after enumeration to detect unexpected actions or command sequences that could signify malicious activity. This could be achieved via the Operating System (OS) or, where supported, modern EPP solutions.

- Device Authentication: Mechanisms that verify the identity and integrity of USB devices before granting them access to the workstation.

These techniques should be integrated into a cohesive detection strategy, leveraging the strengths of each method to provide a comprehensive defense against HID attacks.

As mentioned above, Edge ML might be leveraged to detect such devices by gathering baseline data from a variety of legitimate USB devices. This should include different types of keyboards, mice, flash drives, and other peripherals. The data should capture:

- Power consumption patterns.

- Enumeration and communication sequences.

- Keystroke timings and intervals.

- D-line activities during normal operation.

The data should also cover malicious devices at every stage of their offensive operation. The key features of interest are:

- Power Consumption: Average and peak power draw, and fluctuations over time (for example, while sending payloads or exfiltrating data).

- Timing Analysis: Time intervals between keystrokes, enumeration timings.

- D-Line Activity: Patterns of electrical signals on D+ and D- lines.

- Behavioral Patterns: Frequency and sequence of commands or data packets during offensive operations.

Once such data has been collected and used for training, lightweight models such as decision trees, random forests, or even small neural networks could be effective. This is an ongoing research direction for our team, and we will update this report in due course.
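A decision stump, the smallest possible decision tree, illustrates the kind of lightweight model meant here. Once trained, it is a single comparison, which suits constrained edge hardware. The sketch trains one by exhaustive search. The feature names and training rows are invented for illustration, and the split the stump picks says nothing about real devices.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    features: dict   # feature name -> value
    malicious: bool


def train_stump(samples):
    """Fit a decision stump (a one-level decision tree) by exhaustive search.

    Tries every feature, every observed value as a threshold and both
    directions, keeping the split with the fewest training errors.
    """
    best = None
    for name in samples[0].features:
        for threshold in sorted({s.features[name] for s in samples}):
            for above_is_malicious in (True, False):
                errors = sum(
                    ((s.features[name] > threshold) == above_is_malicious) != s.malicious
                    for s in samples
                )
                if best is None or errors < best[0]:
                    best = (errors, name, threshold, above_is_malicious)
    _, name, threshold, above_is_malicious = best
    return lambda features: (features[name] > threshold) == above_is_malicious


# Toy training rows: invented values, not measurements from this research.
toy = [
    Sample({"mean_ma": 20, "ms_to_first_key": 3500}, False),
    Sample({"mean_ma": 22, "ms_to_first_key": 5000}, False),
    Sample({"mean_ma": 64, "ms_to_first_key": 2000}, True),
    Sample({"mean_ma": 70, "ms_to_first_key": 2500}, True),
    Sample({"mean_ma": 10, "ms_to_first_key": 167}, True),
]
predict = train_stump(toy)
```

A random forest would fit many such trees, each deeper and trained on a random subset of rows and features, and then vote across them. The stump only shows the basic splitting step.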

O.MG Cable.

The O.MG Cable is a HID-emulating device made to look like a regular Apple charging cable:

One of the cables pictured above is a regular cable. Can you tell which one it is?

Not easily, and this is by design.

When compared side by side, there are some differences, particularly in the size of the connector housing. However, the O.MG cable is virtually indistinguishable from a regular cable on its own. These cables come in various connector versions, from the common USB-A to Lightning, to the more modern USB-C to USB-C. This is particularly relevant since Apple introduced USB-C connectors in iPhones, meaning these cables can be used not only by Apple users but also by anyone with a recent mobile phone.

Again, only one pair of connectors above is regular.

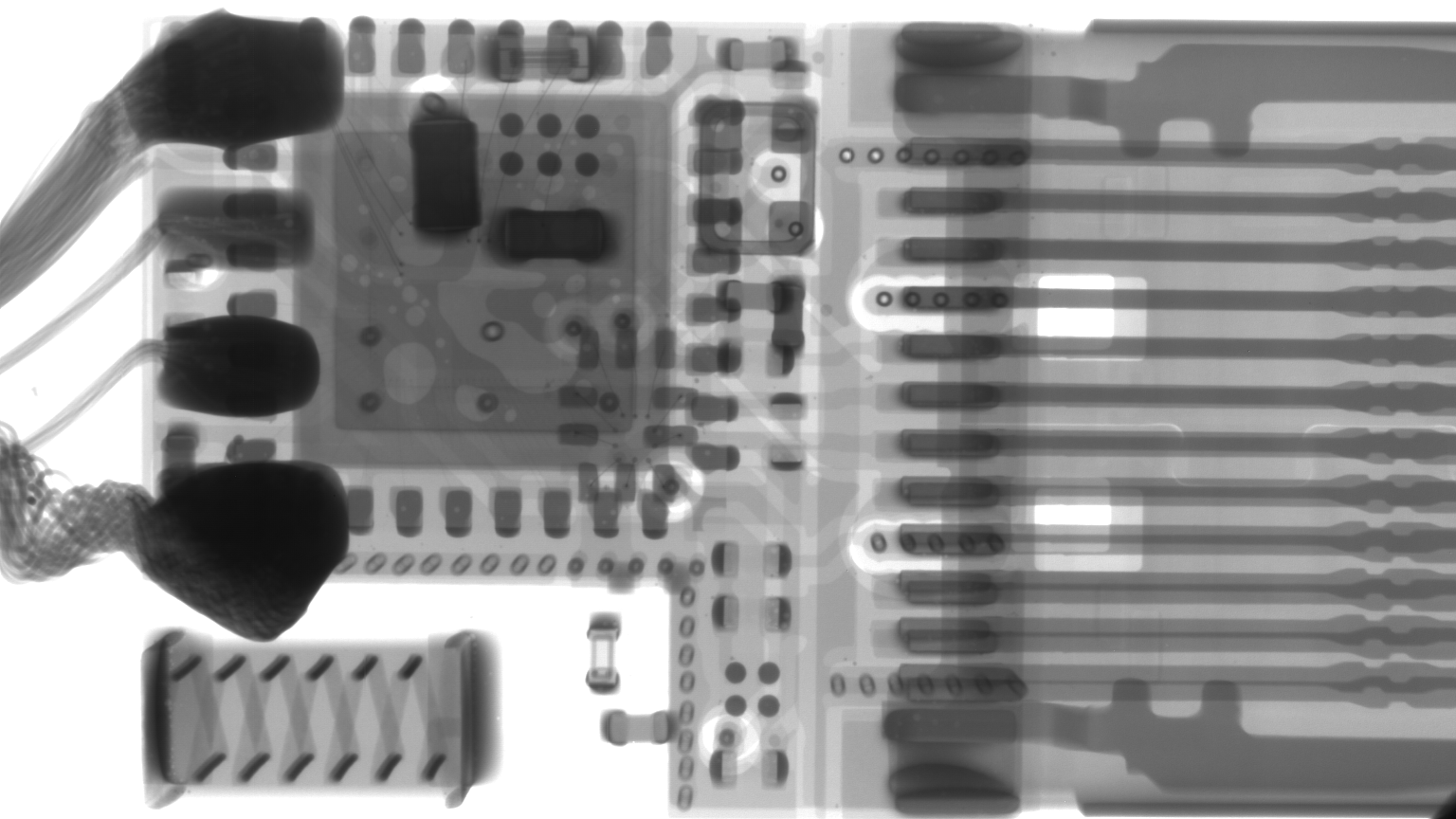

The difference can be seen under X-ray. The active side of the O.MG Cable:

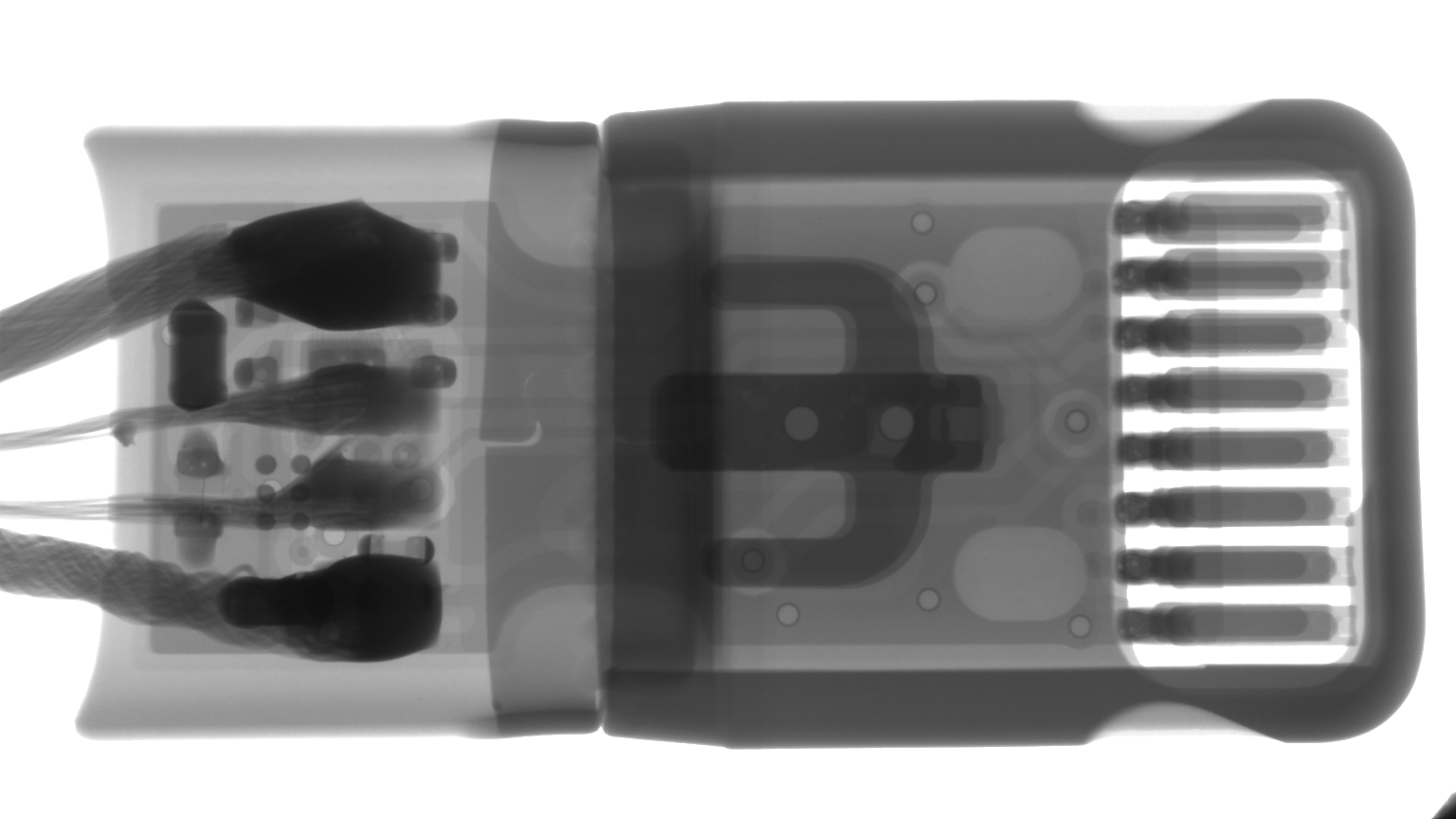

versus a normal cable, same connector:

The closeups show very good engineering of the working part:

and the Lightning side:

The main purpose of the device is to inject keystrokes, either upon being plugged in (with a programmable delay) or remotely, controlled via a WiFi interface (the device contains a wireless Access Point). Unlike typical devices of this kind, it does not remain present and connected unless needed. For example, in the trace above, around the 2.5-second mark, we can see the payload being executed: the HID device is enumerated, keystrokes are sent, and then the line goes silent.
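This attach-on-demand behaviour is itself a signal that a port-level monitor could look for. The sketch below assumes a log of timestamped power and HID attach/detach events from such a monitor. The log format and both thresholds are hypothetical. The sample timings are illustrative, and only the 2.5-second attach is taken from the trace discussed above.

```python
def hid_sessions(events):
    """Pair HID attach/detach events into (start_ms, end_ms) sessions.

    events: time-ordered (t_ms, kind) tuples with kinds "vbus_on",
    "hid_attach" and "hid_detach" (a hypothetical log format).
    """
    sessions, start = [], None
    for t, kind in events:
        if kind == "hid_attach":
            start = t
        elif kind == "hid_detach" and start is not None:
            sessions.append((start, t))
            start = None
    return sessions


def on_demand_hid(events, late_ms=1000, short_ms=10_000):
    """Flag a HID that appears well after power-up and disappears again soon after."""
    t_power = next(t for t, kind in events if kind == "vbus_on")
    return any(
        start - t_power > late_ms and end - start < short_ms
        for start, end in hid_sessions(events)
    )


omg_like = [(0, "vbus_on"), (2500, "hid_attach"), (3400, "hid_detach")]
keyboard = [(0, "vbus_on"), (120, "hid_attach")]
```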

We are not listing all advanced features of this device as it is not the scope of this research, but we would like to highlight some of them from the point of detection/prevention:

- Customisable identification parameters (PID, VID, Manufacturer, Product, Serial Number).

- Mentioned above: keeping the device enumerated only when needed.

- Additional stealth features: geofencing (triggering a payload when in range of set wireless networks), self-destruct (erasing the device after executing the payload).

Initially, we were unable to decode the USB data with either Teledyne’s Advisor T3 USB analyser or the R&S RTO2014 oscilloscope. We do not believe this is a deliberate evasion technique, but it definitely threw us off for a couple of weeks.

Detection/prevention

The only reliable methods to prevent the O.MG Cable from being a successful attack device involve using a USB proxy as described above, either hardware- or software-based. (Again, we consider an attack to be successful if a device is able to send any keystrokes, regardless of their purpose). A combination of:

- Very good external design: we have to mark our test cables (even for us, it is hard to know for sure whether a given white cable is a regular or an O.MG one),

- Customisable identification parameters - so it can look like any USB keyboard,

- Enumerate only when needed: either with a delay or remotely controlled, so on initial plug-in, it really is just a charging cable.

All of the above makes it impossible to detect using standard methods. We feel it is important to give serious kudos to MG here; the O.MG Cable is what we consider to be the Rolls-Royce of offensive cables as a result. The non-standard detection methods that would work include:

- Protocol Analysis: As mentioned above, each device has unique characteristics at the packet level that are intrinsic to the USB controller used and cannot be changed. The exception is devices like the implanted keyboard described in the overview of HID attacks, which match all packets one-for-one. It would be possible to detect the cable based on these characteristics.

- Low-Level Electrical Analysis: As observed, the O.MG Cable generates significant noise on the power and D lines; from a human perspective, it simply “looks weird.” Additionally, it draws much more power than a regular keyboard, which should be a condition for blocking its enumeration altogether.

Both solutions would require an ML approach, as any static method could easily be adapted to and evaded.
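At its simplest, protocol-level fingerprinting means comparing what a device reports against a profile recorded for the genuine product with the same VID/PID. The sketch below does this for a few fields of the standard 18-byte device descriptor. Which fields, if any, a given tool fails to mirror is an assumption here. As noted above, a static check like this is exactly what an attacker can adapt to; packet timing and controller-specific quirks would be richer features.

```python
import struct

DEVICE_DESCRIPTOR = struct.Struct("<BBHBBBBHHHBBBB")  # 18-byte USB device descriptor
FIELDS = ("bLength", "bDescriptorType", "bcdUSB", "bDeviceClass", "bDeviceSubClass",
          "bDeviceProtocol", "bMaxPacketSize0", "idVendor", "idProduct", "bcdDevice",
          "iManufacturer", "iProduct", "iSerialNumber", "bNumConfigurations")
# Fields assumed (not shown) to be harder for a spoofing tool to mirror.
FINGERPRINT_FIELDS = ("bcdUSB", "bMaxPacketSize0", "bcdDevice", "bNumConfigurations")


def parse_device_descriptor(raw: bytes) -> dict:
    """Decode a raw device descriptor (bDescriptorType 0x01) into named fields."""
    if len(raw) < DEVICE_DESCRIPTOR.size or raw[1] != 0x01:
        raise ValueError("not a device descriptor")
    return dict(zip(FIELDS, DEVICE_DESCRIPTOR.unpack_from(raw)))


def mismatches(raw: bytes, known_profile: dict) -> list:
    """Fingerprint fields that differ from the profile recorded for the same VID/PID."""
    desc = parse_device_descriptor(raw)
    return [f for f in FINGERPRINT_FIELDS if desc[f] != known_profile[f]]


# Made-up descriptors sharing VID/PID 0x1234:0x5678.
genuine = DEVICE_DESCRIPTOR.pack(18, 1, 0x0110, 0, 0, 0, 8, 0x1234, 0x5678, 0x0100, 1, 2, 0, 1)
spoofed = DEVICE_DESCRIPTOR.pack(18, 1, 0x0200, 0, 0, 0, 64, 0x1234, 0x5678, 0x0100, 1, 2, 3, 1)
profile = {f: parse_device_descriptor(genuine)[f] for f in FINGERPRINT_FIELDS}
```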

Summary.

In research terms, we often joke about going “down the rabbit hole,” but in the case of this particular study, it was almost impossible not to. As a popular paraphrase of Lewis Carroll’s Alice’s Adventures in Wonderland puts it: “If you don’t know where you are going, any road will get you there.” This captures the complex and often unpredictable journey of delving into the intricacies of detecting malicious HID implants.

There are numerous avenues for us to explore, build, develop, and prototype detection methods for all kinds of malicious HID implants. We are actively pursuing deeper protocol analysis as a means to detect the O.MG Cable and other emerging threats.

It is evident that more can be done at the Operating System level, both at the hardware layer and in the detection and prevention mechanisms. This is another crucial area of exploration for us in the coming months.

Until then, stay vigilant and be careful what you plug into your devices. You never know whether it truly is what it says it is.